Preface

https://github.com/psvmc/ZChatGPTAPIProxy

Setting Up the Proxy

- 点击Use this template按钮创建一个新的代码库。

- 登录到Cloudflare控制台.

- 在帐户主页中,选择

pages>Create a project>Connect to Git - 选择你 Fork 的项目存储库,在

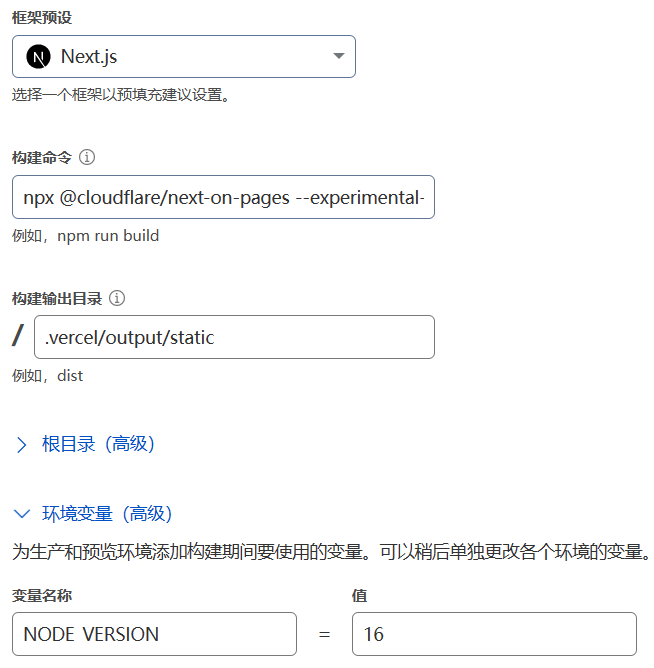

Set up builds and deployments部分中,选择Next.js作为您的框架预设。您的选择将提供以下信息。

一般默认即可

| Configuration option | Value |
|---|---|
| Production branch | main |
| Framework preset | Next.js |
| Build command | npx @cloudflare/next-on-pages --experimental-minify |
| Build directory | .vercel/output/static |

Under Environment variables (advanced), add one variable:

| Variable name | Value |
|---|---|
| NODE_VERSION | 16 |

Click Save and Deploy, then click Continue to project to see the domain your site is served on.

To use the proxy, replace https://api.openai.com in the official API URLs with https://xxx.pages.dev/api, where xxx.pages.dev is your own domain.

Note the extra api segment in the path, as shown in the figure.
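Since only the origin changes and the rest of the path is kept, the rewrite can be expressed as a small string helper. A minimal sketch, assuming xxx.pages.dev stands for your own Pages domain; toProxyUrl is a hypothetical name, not part of the project:

```javascript
// Hypothetical helper, not part of ZChatGPTAPIProxy.
// Assumption: "xxx.pages.dev" is a placeholder for your own Cloudflare Pages domain.
const OFFICIAL_BASE = "https://api.openai.com";
const PROXY_BASE = "https://xxx.pages.dev/api";

// Swap the official origin for the proxy origin. The extra "/api" segment
// comes from PROXY_BASE; the original path (e.g. /v1/models) is kept as-is.
function toProxyUrl(url) {
  if (!url.startsWith(OFFICIAL_BASE + "/")) {
    throw new Error("Not an official OpenAI API URL: " + url);
  }
  return PROXY_BASE + url.slice(OFFICIAL_BASE.length);
}

console.log(toProxyUrl("https://api.openai.com/v1/chat/completions"));
// -> https://xxx.pages.dev/api/v1/chat/completions
```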

Deploying on Your Own Server

Install Git

```bash
yum install -y git
```

Download the project

```bash
cd /data
git clone https://github.com/psvmc/ZChatGPTAPIProxy.git
```

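Install the Node version manager (nvm)

Using the mirror for mainland China (nvm-cn)

Install

```bash
yum install -y curl
bash -c "$(curl -fsSL https://gitee.com/RubyMetric/nvm-cn/raw/main/install.sh)"
```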

Uninstall

```bash
bash -c "$(curl -fsSL https://gitee.com/RubyMetric/nvm-cn/raw/main/uninstall.sh)"
```

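Add the following line to ~/.bashrc so that nvm downloads Node from the mirror:

```bash
# npmmirror.com is the current address of the former npm.taobao.org mirror
export NVM_NODEJS_ORG_MIRROR=https://npmmirror.com/mirrors/node
```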

Then run the following in the terminal to apply the changes, after which the nvm command is available:

```bash
source ~/.bashrc
```

List all available versions:

```bash
nvm ls-remote
```

Install Node 16

```bash
nvm install v16.20.2
```

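Switch the npm registry

```bash
npm config get registry  # view the current registry
npm config set registry https://registry.npmmirror.com  # switch to the npmmirror registry
```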

Run

```bash
npm install
```

Calling the API

Check the service address

Calling directly from JavaScript

Single request

```javascript
const requestOptions = {
```
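A single (non-streaming) request is one POST to the chat completions endpoint through the proxy, with the same headers and JSON body as the official API. A minimal sketch, assuming the placeholder domain xxx.pages.dev and the model name "gpt-3.5-turbo"; buildChatRequest and chatOnce are hypothetical names:

```javascript
// Minimal sketch of a single (non-streaming) chat request through the proxy.
// Assumptions, not taken from the article: the placeholder domain xxx.pages.dev,
// the model name "gpt-3.5-turbo", and the helper names buildChatRequest/chatOnce.
const CHAT_URL = "https://xxx.pages.dev/api/v1/chat/completions";

// Build the fetch() options: same headers and JSON body as the official API.
function buildChatRequest(apiKey, prompt) {
  return {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify({
      model: "gpt-3.5-turbo", // hypothetical; choose one returned by /v1/models
      messages: [{ role: "user", content: prompt }],
      stream: false, // the whole reply comes back as one JSON object
    }),
  };
}

// Needs a global fetch (browsers, Node 18+); defined here but not called.
async function chatOnce(apiKey, prompt) {
  const res = await fetch(CHAT_URL, buildChatRequest(apiKey, prompt));
  if (!res.ok) throw new Error(`HTTP ${res.status}`);
  return res.json();
}
```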

Response

```json
{
```
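In the standard chat.completion response the reply text sits at choices[0].message.content, while failures come back as an error object instead. A minimal sketch of reading it, assuming that standard shape; extractReply is a hypothetical name and the sample object is hand-written, not captured output:

```javascript
// Minimal sketch: pull the assistant's reply out of a non-streaming response.
// Assumes the standard OpenAI chat.completion shape: choices[0].message.content.
function extractReply(response) {
  const choice = response && Array.isArray(response.choices) ? response.choices[0] : undefined;
  if (!choice || !choice.message) {
    // The API reports failures as { error: { message } } instead of choices.
    const reason = response && response.error ? response.error.message : "no choices";
    throw new Error("Unexpected response: " + reason);
  }
  return choice.message.content;
}

// Hand-written sample with only the fields this function reads (not real output).
const sample = { choices: [{ index: 0, message: { role: "assistant", content: "Hi!" }, finish_reason: "stop" }] };
console.log(extractReply(sample)); // Hi!
```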

Streaming request

```javascript
let url = "https://zchatgptapiproxy.pages.dev/api/v1/chat/completions";
```
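With "stream": true the reply arrives as server-sent events: lines of the form data: {json}, ending with data: [DONE]. Network chunks can cut a line in half, so the reader below buffers the incomplete tail. A minimal sketch under those assumptions; splitSSE and streamChat are hypothetical names and "gpt-3.5-turbo" is an assumed model:

```javascript
// Minimal sketch of reading a streamed reply (server-sent events).
// Assumptions: the request sets "stream": true, each event is a line
// "data: {json}", and "data: [DONE]" marks the end. Names are hypothetical.

// Split buffered text into complete "data:" payloads; keep any partial
// trailing line in `rest` so it can be joined with the next network chunk.
function splitSSE(buffer) {
  const lines = buffer.split("\n");
  const rest = lines.pop(); // "" or an incomplete line
  const payloads = [];
  for (const line of lines) {
    const trimmed = line.trim(); // also drops a trailing "\r"
    if (trimmed.startsWith("data:")) {
      payloads.push(trimmed.slice(5).trim());
    }
  }
  return { payloads, rest };
}

// Needs fetch with a readable body (browsers, Node 18+); defined but not called.
async function streamChat(url, apiKey, prompt, onPayload) {
  const res = await fetch(url, {
    method: "POST",
    headers: { "Content-Type": "application/json", Authorization: `Bearer ${apiKey}` },
    body: JSON.stringify({
      model: "gpt-3.5-turbo", // hypothetical model choice
      messages: [{ role: "user", content: prompt }],
      stream: true,
    }),
  });
  if (!res.ok) throw new Error(`HTTP ${res.status}`);
  const reader = res.body.getReader();
  const decoder = new TextDecoder();
  let buffer = "";
  for (;;) {
    const { done, value } = await reader.read();
    if (done) break;
    buffer += decoder.decode(value, { stream: true });
    const { payloads, rest } = splitSSE(buffer);
    buffer = rest;
    for (const p of payloads) {
      if (p === "[DONE]") return; // end of stream
      onPayload(JSON.parse(p)); // one parsed chunk object per event
    }
  }
}
```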

Returned data

While data is arriving

```json
{
```

When the stream ends

```json
{
```
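The chunks above differ only in their choices[0] entry: while data is arriving the text is in delta.content, and the final chunk has an empty delta plus a non-null finish_reason. A minimal sketch of rebuilding the full reply, assuming that chat.completion.chunk shape; collectDeltas is a hypothetical name and the chunks are hand-written, not captured output:

```javascript
// Minimal sketch: rebuild the full reply from parsed stream chunks.
// Assumes the OpenAI chat.completion.chunk shape: text arrives in
// choices[0].delta.content; the last chunk has an empty delta and a
// non-null finish_reason (e.g. "stop"). collectDeltas is hypothetical.
function collectDeltas(chunks) {
  let text = "";
  let finishReason = null;
  for (const chunk of chunks) {
    const choice = chunk.choices && chunk.choices[0];
    if (!choice) continue;
    if (choice.delta && typeof choice.delta.content === "string") {
      text += choice.delta.content;
    }
    if (choice.finish_reason) {
      finishReason = choice.finish_reason; // "stop" means a normal finish
      break;
    }
  }
  return { text, finishReason };
}

// Hand-written chunks with only the fields used above (not captured output).
const chunks = [
  { choices: [{ delta: { role: "assistant", content: "" }, finish_reason: null }] },
  { choices: [{ delta: { content: "Hel" }, finish_reason: null }] },
  { choices: [{ delta: { content: "lo" }, finish_reason: null }] },
  { choices: [{ delta: {}, finish_reason: "stop" }] },
];
console.log(collectDeltas(chunks)); // { text: 'Hello', finishReason: 'stop' }
```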

Calling from Node.js

Add the dependency

```bash
npm install chatgpt
```

Usage

```javascript
import { ChatGPTAPI } from 'chatgpt'
```

Calling from Go

https://github.com/sashabaranov/go-openai

```bash
go get github.com/sashabaranov/go-openai
```

Example

```go
package main
```

Custom URL

```go
config := openai.DefaultConfig("sk-your-api-key")
config.BaseURL = "https://xxx.pages.dev/api/v1"
```

Testing against the official API

```bash
export OPENAI_API_KEY="sk-your-api-key"
```

List the currently supported models

```bash
curl https://api.openai.com/v1/models \
  -H "Authorization: Bearer $OPENAI_API_KEY"
```
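The same models request can go through the proxy from JavaScript; the response lists models under data, each with an id. A minimal sketch, assuming that standard /v1/models shape and the placeholder domain xxx.pages.dev; modelIds and listModels are hypothetical names:

```javascript
// Minimal sketch: list model ids through the proxy instead of api.openai.com.
// Assumes the standard /v1/models shape { object: "list", data: [{ id, ... }] }.
// modelIds/listModels are hypothetical; xxx.pages.dev is a placeholder domain.
function modelIds(response) {
  if (!response || !Array.isArray(response.data)) {
    throw new Error("Unexpected /v1/models response");
  }
  return response.data.map((m) => m.id).sort();
}

// Needs a global fetch (browsers, Node 18+); defined here but not called.
async function listModels(apiKey, base = "https://xxx.pages.dev/api") {
  const res = await fetch(`${base}/v1/models`, {
    headers: { Authorization: `Bearer ${apiKey}` },
  });
  if (!res.ok) throw new Error(`HTTP ${res.status}`);
  return modelIds(await res.json());
}
```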

Chat

```bash
curl https://api.openai.com/v1/chat/completions \
```