Installing K8S

Environment

CentOS 7.6

More than 2 GB of memory

Two or more servers

| IP | Role | Installed software |
|---|---|---|
| 192.168.10.39 | k8s-master | docker flannel kubelet kube-apiserver kube-scheduler kube-controller-manager |
| 192.168.10.40 | k8s-node01 | docker flannel kubelet kube-proxy |

Repository mirrors

    cd /etc/yum.repos.d

CentOS-Base.repo

    vi CentOS-Base.repo

With the following content:

    # CentOS-Base.repo

epel-7.repo

    vi epel-7.repo

With the following content:

    [epel]

docker-ce.repo

    vi docker-ce.repo

With the following content:

    [docker-ce-stable]

kubernetes.repo

    vi kubernetes.repo

With the following content:

    [kubernetes]

Installing Docker

Install on all hosts.

Install Docker

    # Remove old versions (if any were installed previously)

Show the Docker version:

    Docker version 18.06.1-ce, build e68fc7a

If you get this error:

    GPG key retrieval failed: [Errno 14] curl#7 - "Failed to connect to 2600:9000:2003:ca00:3:db06:4200:93a1: Network is unreachable"

Fix

Check the OS release:

    cat /etc/redhat-release

Find the key that matches your release on mirrors.163.com and import it:

    rpm --import http://mirrors.163.com/centos/RPM-GPG-KEY-CentOS-7

For users whose Docker client version is above 1.10.0

Create or edit /etc/docker/daemon.json:

    vi /etc/docker/daemon.json

Add or change:

    {

Restart Docker:

    systemctl daemon-reload

Environment initialization

Run on all hosts.

Load the ipvs kernel modules on every node:

    modprobe ip_vs

Disable the firewall and SELinux:

    systemctl stop firewalld && systemctl disable firewalld

Disable the swap partition:

    swapoff -a # temporary
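`swapoff -a` only lasts until the next reboot. One common way to make the change permanent is to comment out the swap entries in /etc/fstab. The following is a minimal sketch, assuming a standard fstab layout in which the word `swap` appears as a whitespace-separated field; the function name `comment_out_swap` is made up for this example.

```shell
# Comment out active swap entries in an fstab-style file.
# A copy of the original file is kept next to it with a .bak suffix.
comment_out_swap() {
  local fstab="$1"
  # Select lines whose first non-blank character is not '#' and that
  # contain "swap" as a separate field, then prefix them with '#'.
  sed -E -i.bak '/^[[:space:]]*[^#[:space:]].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}
```

On a real host this would be called as `comment_out_swap /etc/fstab` (as root), after reviewing which lines it would touch.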

Set hostnames and configure hosts

On 192.168.10.39:

    hostnamectl set-hostname k8s-master

On 192.168.10.40:

    hostnamectl set-hostname k8s-node01

On all hosts, add the host entries:

    cat >> /etc/hosts << EOF
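Appending with `>>` adds duplicate lines if the step is run twice. As a hedged alternative, the sketch below appends an entry only when the host name is not yet mapped. The helper name `add_host_entry` is hypothetical; the IP addresses and host names in the usage note come from the table at the top of this post.

```shell
# Append "ip name" to a hosts-style file unless that name already
# appears as a host name on a non-comment line.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  if ! awk -v n="$name" '
         !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
         END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

For this cluster the calls would be `add_host_entry /etc/hosts 192.168.10.39 k8s-master` and `add_host_entry /etc/hosts 192.168.10.40 k8s-node01`, run on every host.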

Kernel tuning: pass bridged IPv4 traffic to the iptables chains

    cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

Set the system time zone and sync with a time server

    yum install -y ntpdate && \

Check the required ports

Control-plane node

| Protocol | Direction | Port range | Purpose | Used by |
|---|---|---|---|---|
| TCP | Inbound | 6443 | Kubernetes API server | All components |
| TCP | Inbound | 2379-2380 | etcd server client API | kube-apiserver, etcd |
| TCP | Inbound | 10250 | Kubelet API | kubelet itself, control-plane components |
| TCP | Inbound | 10251 | kube-scheduler | kube-scheduler itself |
| TCP | Inbound | 10252 | kube-controller-manager | kube-controller-manager itself |

Worker node

| Protocol | Direction | Port range | Purpose | Used by |
|---|---|---|---|---|
| TCP | Inbound | 10250 | Kubelet API | kubelet itself, control-plane components |
| TCP | Inbound | 30000-32767 | NodePort services | All components |
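Before initializing the cluster it can help to confirm that none of these ports is already taken by another process. The sketch below is one possible check, not part of the original procedure: it reads the output of `ss -ltn` on standard input and prints every requested port that already has a listener. The function name `ports_in_use` is an assumption for illustration.

```shell
# Print each requested port that already has a TCP listener.
# Expects `ss -ltn` output on stdin; the ports to look for are arguments.
ports_in_use() {
  awk -v want="$*" '
    BEGIN { n = split(want, w, " "); for (i = 1; i <= n; i++) need[w[i]] = 1 }
    NR > 1 {
      port = $4                  # "Local Address:Port" column
      sub(/.*:/, "", port)       # keep only what follows the last colon
      if ((port in need) && !(port in seen)) { seen[port] = 1; print port }
    }'
}
```

On a control-plane node this could be used as `ss -ltn | ports_in_use 6443 2379 2380 10250 10251 10252`; an empty result means none of the listed ports is occupied yet.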

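Installing K8S

Install on all hosts.

Ubuntu

    apt-get update && apt-get install -y apt-transport-https

CentOS

    cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

If an older version was installed, reset it first:

    kubeadm reset

Check the installed version:

    kubectl version --client

You need the following packages on every machine:

- kubeadm: the command that bootstraps the cluster.
- kubelet: runs on every node in the cluster and starts Pods and containers.
- kubectl: the command-line tool for talking to the cluster.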

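Preparing images

List all images that kubeadm needs:

    kubeadm config images list

Result:

    k8s.gcr.io/kube-apiserver:v1.20.4
    k8s.gcr.io/kube-controller-manager:v1.20.4
    k8s.gcr.io/kube-scheduler:v1.20.4
    k8s.gcr.io/kube-proxy:v1.20.4
    k8s.gcr.io/pause:3.2
    k8s.gcr.io/etcd:3.4.13-0
    k8s.gcr.io/coredns:1.7.0

Write a script that pulls them in bulk. Create the file:

    vi kubeadm_config_images_list.sh

and enter the script body. In the script, docker tag labels a local image so that it belongs to a given repository; the images are pulled from an Aliyun mirror, which avoids needing a proxy to reach k8s.gcr.io.

After saving kubeadm_config_images_list.sh, make it executable:

    sudo chmod +x kubeadm_config_images_list.sh

Then run it from the current directory:

    ./kubeadm_config_images_list.sh

That completes the image preparation.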
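The pull-and-retag approach described above can be sketched as follows. This is an illustration under stated assumptions, not the script from the original post: the mirror path `registry.aliyuncs.com/google_containers` and the function name `mirror_commands` are assumptions, and the function only prints the docker commands so they can be reviewed before anything is run.

```shell
# Assumed Aliyun mirror path; adjust to whatever mirror you actually use.
MIRROR="registry.aliyuncs.com/google_containers"

# Read k8s.gcr.io image names on stdin (one per line) and print the docker
# commands that fetch each one through the mirror and retag it.
mirror_commands() {
  local target name src
  while read -r target; do
    [ -n "$target" ] || continue
    name="${target#k8s.gcr.io/}"                  # e.g. kube-proxy:v1.20.4
    src="$MIRROR/$name"
    printf 'docker pull %s\n' "$src"
    printf 'docker tag %s %s\n' "$src" "$target"  # the name kubeadm expects
    printf 'docker rmi %s\n' "$src"               # drop the mirror-named tag
  done
}
```

`kubeadm config images list | mirror_commands` prints the commands for review; piping that output on to `sh` would execute them.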

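Deploying the Kubernetes Master

Run on the Master node only.

The apiserver address used here must be changed to your own master's address.

Run:

    # Remove the previous configuration

Success message: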

    Your Kubernetes control-plane has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    Alternatively, if you are the root user, you can run:

      export KUBECONFIG=/etc/kubernetes/admin.conf

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    Then you can join any number of worker nodes by running the following on each as root:

    kubeadm join 192.168.10.39:6443 --token am6y16.jfvsunhm28h7nn92 \
        --discovery-token-ca-cert-hash sha256:21c1254f9da1bf4c46a6841b603ca1c21193eb32c27639ebf775f6fab8ba39de

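Follow the instructions in the output:

    mkdir -p $HOME/.kube

As the root user, run:

    export KUBECONFIG=/etc/kubernetes/admin.conf

Error

    The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error

Cause: when minikube was used earlier to deploy a test environment, it modified kubeadm's configuration files. Deleting them resolves the problem.

Fix: delete the old configuration files:

    rm -rf /var/lib/etcd

Inspect the error:

    systemctl status kubelet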

Regenerating the token

Run on the Master node only, when the token has expired.

The default token is valid for 24 hours and can no longer be used once it expires. To join further nodes after that, proceed as follows.

Generate a new token:

    kubeadm token create

Output:

    vyaztf.snjf2za5636nekcv

List the tokens:

    kubeadm token list

Get the SHA-256 hash of the CA certificate:

    openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

Output:

    21c1254f9da1bf4c46a6841b603ca1c21193eb32c27639ebf775f6fab8ba39de

A Node can then join with:

    kubeadm join 192.168.10.39:6443 --token vyaztf.snjf2za5636nekcv \
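The join command is assembled from three pieces: the API server endpoint, the token, and the CA certificate hash. A small sketch of that assembly, with basic shape checks on the token and hash, is shown below. The function name `build_join_command` is hypothetical; the values in the usage note are the ones printed earlier in this section.

```shell
# Compose a kubeadm join command from endpoint, token and CA cert hash.
# Returns non-zero if the token or hash does not have the expected shape.
build_join_command() {
  local endpoint="$1" token="$2" hash="$3"
  # Bootstrap tokens look like <6 chars>.<16 chars>, lowercase letters and digits.
  if ! printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
    echo "invalid token: $token" >&2
    return 1
  fi
  # A SHA-256 digest is 64 hexadecimal digits.
  if ! printf '%s\n' "$hash" | grep -Eq '^[0-9a-f]{64}$'; then
    echo "invalid hash: $hash" >&2
    return 1
  fi
  printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' \
    "$endpoint" "$token" "$hash"
}
```

For example, `build_join_command 192.168.10.39:6443 vyaztf.snjf2za5636nekcv 21c1254f9da1bf4c46a6841b603ca1c21193eb32c27639ebf775f6fab8ba39de` prints the full command to run on the Node. kubeadm can also do both steps at once with `kubeadm token create --print-join-command`.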

Joining a Kubernetes Node

Run on the Node.

Use kubeadm join to register the Node with the Master. The kubeadm join command was already generated by kubeadm init above.

    kubeadm join 192.168.10.39:6443 --token am6y16.jfvsunhm28h7nn92 \

Check whether it succeeded

Run on the Master node:

    kubectl get nodes

Installing the network plugin

Run on the Master node only.

Run:

    wget https://raw.githubusercontent.com/coreos/flannel/a70459be0084506e4ec919aa1c114638878db11b/Documentation/kube-flannel.yml

Change the image address. The default image may fail to pull; make sure the quay.io registry is reachable, and otherwise make the following change:

    vi kube-flannel.yml

In the editor, replace the image on lines 106 and 120 with the one below, then verify the result as shown.

Change image to lizhenliang/flannel:v0.11.0-amd64

    cat -n kube-flannel.yml|grep lizhenliang/flannel:v0.11.0-amd64

Output:

    106 image: lizhenliang/flannel:v0.11.0-amd64
    120 image: lizhenliang/flannel:v0.11.0-amd64

Apply:

    kubectl apply -f kube-flannel.yml

Output:

    root 2032 2013 0 21:00 ? 00:00:00 /opt/bin/flanneld --ip-masq --kube-subnet-mgr

Check the node status of the cluster. After the network plugin is installed, continue only once every node shows Ready, as below:

    kubectl get nodes

Result:

    NAME         STATUS   ROLES    AGE     VERSION
    k8s-master   Ready    master   37m     v1.15.0
    k8s-node01   Ready    <none>   5m22s   v1.15.0

List all pods:

    kubectl get pod -n kube-system

Continue only when every pod shows 1/1. If the flannel pod does not, check the network and redo the installation:

    kubectl delete -f kube-flannel.yml

Then download the file again with wget, change the image address, and apply it again:

    kubectl apply -f kube-flannel.yml
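Scanning the pod list by eye gets tedious on larger clusters. As a rough sketch, the filter below reads `kubectl get pod -n kube-system` output on standard input and prints the pods that are not fully ready. It assumes the default column order (NAME, READY, STATUS, ...); the function name `not_ready_pods` is made up for this example.

```shell
# Print the names of pods whose READY column is not n/n or whose
# STATUS is not Running. Expects `kubectl get pod` output on stdin.
not_ready_pods() {
  awk 'NR > 1 {
    split($2, r, "/")                        # READY column, e.g. 0/1
    if (r[1] != r[2] || $3 != "Running") print $1
  }'
}
```

Usage: `kubectl get pod -n kube-system | not_ready_pods`. An empty result means every pod is up.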

Troubleshooting

With version 1.20.x, applying the manifest fails:

    yum install -y kubelet-1.20.4 kubeadm-1.20.4 kubectl-1.20.4

Error:

    unable to recognize "kube-flannel.yml": no matches for kind "DaemonSet" in version "extensions/v1beta1"

Cause: as of v1.16, DaemonSet, Deployment, StatefulSet, and ReplicaSet are no longer served from extensions/v1beta1, apps/v1beta1, or apps/v1beta2.

The fix is to change the API version in the yml file to apps/v1.

The manifest was written for Kubernetes 1.15.x, and 1.16.x and later dropped support for some API versions.

So there are two options:

- Use an older version of K8S
- Modify the manifest

That is, in the manifest above, change

    apiVersion: extensions/v1beta1

to

    apiVersion: apps/v1

Change

    apiVersion: rbac.authorization.k8s.io/v1beta1

to

    apiVersion: rbac.authorization.k8s.io/v1

Then, under

    spec:

of every DaemonSet, add the following at the same level as template:

    selector:
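The two apiVersion substitutions can be applied with sed instead of by hand. The sketch below is a hedged shortcut: it rewrites every matching apiVersion line in the file, so it assumes DaemonSet is the only kind declared under extensions/v1beta1 in that manifest. The `selector` still has to be added to each DaemonSet manually. The function name `migrate_api_versions` is hypothetical.

```shell
# Rewrite the deprecated apiVersion lines in a manifest in place,
# keeping the original as <file>.bak.
migrate_api_versions() {
  local manifest="$1"
  sed -i.bak \
    -e 's|^apiVersion: extensions/v1beta1$|apiVersion: apps/v1|' \
    -e 's|^apiVersion: rbac\.authorization\.k8s\.io/v1beta1$|apiVersion: rbac.authorization.k8s.io/v1|' \
    "$manifest"
}
```

Usage: `migrate_api_versions kube-flannel.yml`, followed by `diff kube-flannel.yml.bak kube-flannel.yml` to review what changed.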

The full manifest after these changes:

    ---

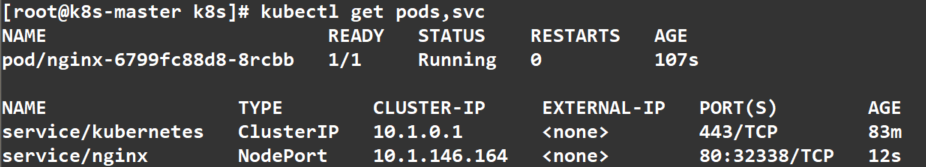

Testing the cluster

Create a pod in the Kubernetes cluster, expose a port, and verify that it is reachable:

    kubectl create deployment nginx --image=nginx

Output:

    deployment.apps/nginx created

Service

    kubectl expose deployment nginx --port=80 --type=NodePort

Output:

    service/nginx exposed

View them:

    kubectl get pods,svc

The service listing shows the NodePort that was assigned.

Access URL: http://NodeIP:Port

In this example: http://192.168.10.39:32338
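For a NodePort service, the PORT(S) column of `kubectl get svc` has the form `servicePort:nodePort/protocol`, for example `80:32338/TCP`. A minimal sketch of turning that value into the access URL follows; the function name `nodeport_url` is an assumption for illustration.

```shell
# Build the access URL from a node IP and a PORT(S) value such as 80:32338/TCP.
nodeport_url() {
  local node_ip="$1" ports="$2" node_port
  node_port="${ports#*:}"        # drop the service port and colon -> 32338/TCP
  node_port="${node_port%%/*}"   # drop the protocol suffix        -> 32338
  printf 'http://%s:%s\n' "$node_ip" "$node_port"
}
```

`nodeport_url 192.168.10.39 80:32338/TCP` yields the URL used in this example. On a live cluster, `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'` prints the node port directly.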

Deploying the Dashboard

Project page: https://github.com/kubernetes/dashboard

Note:

The Dashboard version must match the Kubernetes version; otherwise it cannot fetch data.

Download:

    wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.2.0/aio/deploy/recommended.yaml

Modify:

    kind: Service

Set up certificates:

    cd /etc/kubernetes/pki/

Go back to the directory that contains the yaml and edit it:

    vi kubernetes-dashboard.yaml

Edit dashboard-controller.yaml and add the two certificate lines under args:

    - --tls-key-file=dashboard-key.pem

Pull the required image:

    docker pull kubernetesui/dashboard:v2.2.0

Apply:

    kubectl apply -f recommended.yaml

Open in a browser: https://NodeIP:30001

Create a service account and bind it to the default cluster-admin cluster role:

    kubectl create serviceaccount dashboard-admin -n kube-system

Output:

    serviceaccount/dashboard-admin created

Then run:

    kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

Output:

    clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

Get the token:

    kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Output:

    Name: dashboard-admin-token-qkhrg

You can now log in with the token shown in that output.
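The describe output contains several fields, and only the value after `token:` is needed for the login form. The following sketch extracts just that value from `kubectl describe secrets` output read on standard input; the function name `extract_token` is hypothetical.

```shell
# Print only the token value from `kubectl describe secrets` output on stdin.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}
```

It can be appended to the command above as `... | extract_token`, which makes the token easier to copy.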

List all services:

    kubectl get svc --all-namespaces

Delete:

    kubectl delete -f recommended.yaml

Uninstalling

Tear down the cluster services:

    kubeadm reset

Stop all containers:

    docker stop $(docker ps -a -q)

Remove all containers:

    docker rm $(docker ps -a -q)

Remove all images:

    docker rmi --force $(docker images -q)