Installing Docker

This guide uses Docker as the container runtime for K8S, so install Docker first.

https://www.psvmc.cn/article/2018-12-13-docker-install.html

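Installing K8S

- Configure the /etc/hosts file so that every machine can be reached by its hostname.

- If the environment uses a proxy, be sure to set no_proxy correctly in the environment variables.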
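As a rough illustration of the two points above, the sketch below prints the lines that would go into /etc/hosts and builds a matching no_proxy value. The node names and the second and third IP addresses are hypothetical placeholders, not values from this article; only 192.168.7.11 appears later in the text.

```shell
#!/usr/bin/env bash
# Hypothetical node list (IP and hostname per line); replace with your own machines.
nodes="192.168.7.11 k8s-master
192.168.7.12 k8s-node1
192.168.7.13 k8s-node2"

# Start with the loopback entries, then add every node IP and hostname.
no_proxy_value="localhost,127.0.0.1"
while read -r ip name; do
  no_proxy_value="$no_proxy_value,$ip,$name"
done <<EOF
$nodes
EOF

echo "# Lines to append to /etc/hosts on every machine:"
printf '%s\n' "$nodes"
echo "# Line to add to the shell profile when a proxy is in use:"
echo "export no_proxy=$no_proxy_value"
```

The script only prints its suggestions, so nothing on the system changes until you copy the lines into place yourself.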

- The master also needs to run the commands below.

k8s.conf (kernel modules)

    cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

k8s.conf (sysctl settings)

    cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

Apply the settings

    sudo sysctl --system
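The bodies of the two heredocs are not shown above. Assuming they follow the kubeadm installation guide of that period (an assumption, not something this article confirms), the files would load the br_netfilter module and let iptables see bridged traffic. The sketch writes both files to a scratch directory so they can be reviewed before being copied into /etc; the scratch file names are made up for this example.

```shell
#!/usr/bin/env bash
# Scratch directory so nothing under /etc is touched by this sketch.
out_dir="$(mktemp -d)"

# Assumed body of /etc/modules-load.d/k8s.conf: load br_netfilter at boot.
cat <<EOF > "$out_dir/modules-k8s.conf"
br_netfilter
EOF

# Assumed body of /etc/sysctl.d/k8s.conf: pass bridged traffic through iptables.
cat <<EOF > "$out_dir/sysctl-k8s.conf"
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

echo "Review the files in $out_dir, then copy them into place:"
echo "  sudo cp $out_dir/modules-k8s.conf /etc/modules-load.d/k8s.conf"
echo "  sudo cp $out_dir/sysctl-k8s.conf /etc/sysctl.d/k8s.conf"
echo "  sudo sysctl --system"
```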

Ubuntu

    apt-get update && apt-get install -y apt-transport-https

CentOS

kubernetes.repo

    cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

selinux

    # Set SELinux to permissive mode (effectively disabling it)

Install

    yum install -y kubelet-1.20.4 kubeadm-1.20.4 kubectl-1.20.4

Check

    kubectl version --client

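You need to install the following packages on every machine:

- kubelet: runs on every node in the cluster and starts Pods and containers.

- kubectl: the command-line tool for talking to the cluster.

- kubeadm: the command that bootstraps the cluster.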
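A quick way to confirm that all three packages landed on a machine is to look each binary up on PATH. This is only a convenience sketch; the helper name is_installed is made up for this example.

```shell
#!/usr/bin/env bash
# Succeeds when the named command can be found on PATH.
is_installed() {
  command -v "$1" >/dev/null 2>&1
}

# Report the status of each tool the cluster nodes need.
for tool in kubelet kubeadm kubectl; do
  if is_installed "$tool"; then
    echo "found:   $tool ($(command -v "$tool"))"
  else
    echo "missing: $tool"
  fi
done
```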

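Minikube

Downloading and starting minikube

minikube is a tool that lets you run Kubernetes locally. It runs a single-node Kubernetes cluster on your personal computer (Windows, macOS, or Linux PC) so that you can try out Kubernetes or use it for day-to-day development.

Note

This creates a single-node cluster. By default the node is a virtual machine, which relies on an external hypervisor such as VirtualBox.

GitHub: https://github.com/kubernetes/minikube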

Download

    curl -Lo minikube http://kubernetes.oss-cn-hangzhou.aliyuncs.com/minikube/releases/v1.17.1/minikube-linux-amd64

Start

    minikube start --vm-driver=none --image-mirror-country='cn'

Note

The --image-mirror-country='cn' option is intended for users in China; it makes minikube pull images from the Alibaba Cloud registry mirror.

Uninstall

    minikube stop

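Kind

Kind documentation: https://kind.sigs.k8s.io/docs/user/quick-start/

Kind features

Based on Docker rather than virtualization

Instead of packaging a virtual machine image, Kind runs the K8S components directly in Docker containers. The benefits:

- No guest OS has to run, so resource usage is lower.

- It does not rely on virtualization, so it can be used inside a VM.

- The files are smaller and easier to move around.

Supports multi-node K8S clusters and HA

Kind supports deploying nodes with different roles. A configuration file controls how many Master nodes and how many Worker nodes you get, which lets you model a real production environment more closely.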
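To make the multi-node idea concrete, here is a minimal sketch of such a configuration file with one control-plane node and two workers. The file name kind-multi.yaml and the node counts are arbitrary choices for illustration; the file is written to a scratch directory.

```shell
#!/usr/bin/env bash
# Scratch location for the example config; any directory works.
cfg_dir="$(mktemp -d)"
multi_cfg="$cfg_dir/kind-multi.yaml"

# One control-plane (Master) node and two Worker nodes.
cat <<EOF > "$multi_cfg"
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
- role: worker
- role: worker
EOF

echo "Wrote $multi_cfg; create the cluster with:"
echo "  kind create cluster --config $multi_cfg"
```

Adding more `- role: control-plane` entries is how an HA layout would be requested.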

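However

Because Kind nodes run inside containers, a nodePort is not reachable from outside even when it is configured, unless the port is also mapped out of the node container.

So Minikube is still the recommended choice for testing.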

Install

Check the PATH environment variable

    echo $PATH

Download

    curl -Lo ./kind https://kind.sigs.k8s.io/dl/v0.10.0/kind-linux-amd64

Check the installation

    kind version

In the simplest case, a single command creates a single-node K8S environment

    kind create cluster

View cluster information

    kubectl cluster-info --context kind-kind

Output

Kubernetes control plane is running at https://127.0.0.1:35517

KubeDNS is running at https://127.0.0.1:35517/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

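Delete

    kind delete cluster

The default configuration, however, has a few limitations that make it unsuitable for most real needs. The main ones are:

- The APIServer listens only on 127.0.0.1, so it cannot be reached from outside the machine running Kind.

- Because of network conditions in mainland China, Docker Hub is often unreachable or times out, so image pulls fail or are very slow.

The configuration file below lifts these limitations:

Create the configuration file

    vi kind.yaml

Contents

    kind: Cluster

Note

- apiServerAddress: set this to the LAN IP, or to whichever IP you want the APIServer to listen on.

- https://tiaudqrq.mirror.aliyuncs.com: the Docker Hub mirror endpoint.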
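Only the first line of kind.yaml survives in the listing above. A plausible reconstruction, assuming the file sets networking.apiServerAddress and patches containerd with a docker.io mirror (the layout is an assumption; the IP and mirror URL are the ones this article mentions), might look like this. The file is written to a scratch directory rather than the current one.

```shell
#!/usr/bin/env bash
# Values taken from the article; replace them with your own.
api_address="192.168.7.11"
mirror_url="https://tiaudqrq.mirror.aliyuncs.com"

cfg_dir="$(mktemp -d)"
kind_cfg="$cfg_dir/kind.yaml"

# Assumed layout: listen on the LAN IP and pull docker.io images via the mirror.
cat <<EOF > "$kind_cfg"
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
networking:
  apiServerAddress: "${api_address}"
containerdConfigPatches:
- |-
  [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
    endpoint = ["${mirror_url}"]
nodes:
- role: control-plane
EOF

echo "Wrote $kind_cfg; recreate the cluster with:"
echo "  kind delete cluster"
echo "  kind create cluster --config $kind_cfg"
```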

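Then run

    # Delete the previous cluster first

If it hangs for a long time at the Ensuring node image (kindest/node:v1.20.2) step, you can run

    docker pull kindest/node:v1.20.2

to see a progress bar for the image pull.

View cluster information

    kubectl cluster-info --context kind-kind

You should see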

Kubernetes control plane is running at https://192.168.7.11:34418

KubeDNS is running at https://192.168.7.11:34418/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

List the nodes

    kubectl get nodes

Check the cluster's system Pods

    kubectl get po -n kube-system

Practice

Deployment practice

First prepare the Deployment configuration file (this example uses the nginx image)

Create a directory

    mkdir /root/k8s

app.yaml

    apiVersion: apps/v1
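The listing above shows only the first line of app.yaml. As a minimal sketch of what such a Deployment could contain, assuming it is named web (the name used by the later describe command) and runs nginx on port 80; the replica count and the app: web label are hypothetical.

```shell
#!/usr/bin/env bash
# The article works in /root/k8s; a scratch directory is used here instead.
work_dir="$(mktemp -d)"
app_yaml="$work_dir/app.yaml"

# Assumed Deployment: two nginx Pods labelled app=web.
cat <<EOF > "$app_yaml"
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 2
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
EOF

echo "Wrote $app_yaml; create the Deployment with:"
echo "  kubectl create -f $app_yaml"
```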

Create the service with kubectl

Create

    kubectl delete -f app.yaml

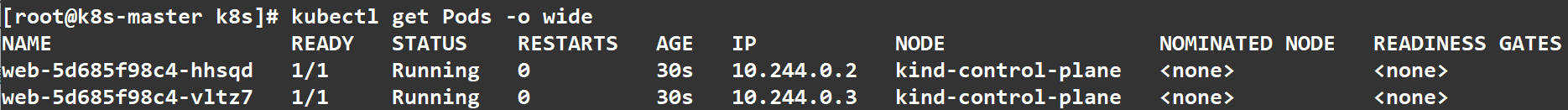

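After a short wait, check how the Pods were scheduled and whether they are running.

You can see each Pod's name, status, and IP, along with the name of the Node it runs on.

    kubectl get pods -o wide

When startup succeeds, READY shows 1/1.

If it does not, check why the pod failed to start

    kubectl describe pod web

Delete

    kubectl delete -f app.yaml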

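Service practice

When you create a service in k8s you specify its type, commonly one of ClusterIP, NodePort, or LoadBalancer.

- ClusterIP is the default k8s service type. It gives you a service inside the cluster that other applications in the cluster can reach; it is not accessible from outside the cluster.

- NodePort is the most basic way to bring external traffic to your service. As the name suggests, it opens a specific port on every node (virtual machine), and any traffic sent to that port is forwarded to the service.

- LoadBalancer is the standard way to expose a service directly. All traffic to the port you specify is forwarded to the service. There is no filtering and no routing, which means you can send almost any kind of traffic to it: HTTP, TCP, UDP, WebSocket, gRPC, and so on.

LoadBalancer and NodePort work the same way underneath. The difference is that LoadBalancer takes one extra step: it asks the cloud provider to create a load balancer that directs traffic to the nodes.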

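The Deployment created above still cannot be reached in a sensible way, so next we create a service to act as the entry point to the application.

The service can be reached in the following ways:

- From outside the K8s cluster: External-IP:Port

- From outside the K8s cluster: NodeIP:NodePort

- From inside the K8s cluster: Cluster-IP:Port

- From inside the K8s cluster: PodIP:TargetPort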

First create the service configuration

    vi service.yaml

service.yaml

    apiVersion: v1
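Only the first line of service.yaml is shown above. A minimal sketch of a ClusterIP service that fronts the nginx Pods could look like the following. The service port 8888 is inferred from the `kubectl get service` output later in this section, and the app: web selector is a hypothetical label that must match whatever labels the Deployment's Pods actually carry.

```shell
#!/usr/bin/env bash
work_dir="$(mktemp -d)"
service_yaml="$work_dir/service.yaml"

# Assumed ClusterIP service: port 8888 inside the cluster, forwarded to port 80 on the Pods.
cat <<EOF > "$service_yaml"
apiVersion: v1
kind: Service
metadata:
  name: web
spec:
  type: ClusterIP
  selector:
    app: web
  ports:
  - port: 8888
    targetPort: 80
EOF

echo "Wrote $service_yaml; create the service with:"
echo "  kubectl create -f $service_yaml"
```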

Create the service

    kubectl create -f service.yaml

It reports success

service/web created

List services

    kubectl get service

Result

    NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
    kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP    10m
    web          ClusterIP   10.96.145.233   <none>        8888/TCP   2m31s

The backend application can now be reached, with load balancing, from any node through the ClusterIP.

Inspect

    kubectl describe service web

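External access

To make testing easier, you can stop the firewall first

    systemctl stop firewalld

Four approaches are covered here

- Proxy: recommended for testing only.

- Port forwarding: recommended for testing only.

- NodePort: can be combined with Nginx and is suitable for production.

- ingress-nginx: effectively integrates Nginx into K8S and is suitable for production.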

Proxy

Start Kubernetes in proxy mode:

    kubectl proxy --address='0.0.0.0' --accept-hosts='^*$' --port=8080

Note

- --address='0.0.0.0' allows remote access.

- --accept-hosts='^*$' makes the proxy accept requests from any host.

You can then reach the service through the Kubernetes API using this pattern:

    http://ip:8080/api/v1/namespaces/[namespace-name]/services/[service-name]/proxy

To reach the service defined above, use this address:

    http://192.168.7.11:8080/api/v1/namespaces/default/services/web/proxy/
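The URL pattern is easy to get wrong by hand, so a small helper can assemble it from its parts. The function name proxy_url is made up for this sketch, and port 8080 is hard-coded to match the kubectl proxy command above.

```shell
#!/usr/bin/env bash
# Build the proxy URL for a service.
# $1 = host running kubectl proxy, $2 = namespace, $3 = service name
proxy_url() {
  printf 'http://%s:8080/api/v1/namespaces/%s/services/%s/proxy/\n' "$1" "$2" "$3"
}

url="$(proxy_url 192.168.7.11 default web)"
echo "$url"
```

With the proxy running, `curl "$url"` should then return the application's response.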

Port forwarding

Use kubectl port-forward to reach an application running in K8S

    kubectl port-forward --address 0.0.0.0 service/web 30010:80

Note

Be sure to add --address 0.0.0.0; otherwise the port is reachable only from the local machine.

Check the port

    netstat -nltp | grep 30010

Access

    curl http://localhost:30010

Access over the LAN

NodePort

Note: this approach does not work with Kind, although it does work on a regular cluster. It works with minikube.

    vi service3.yaml

service3.yaml

    apiVersion: v1
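The body of service3.yaml is not shown above. A minimal sketch of a NodePort service, under the assumption that it targets the same nginx Pods: the name web-nodeport, the app: web selector, and the node port 30080 are all hypothetical (the node port only has to fall inside the default 30000-32767 range).

```shell
#!/usr/bin/env bash
work_dir="$(mktemp -d)"
nodeport_yaml="$work_dir/service3.yaml"

# Assumed NodePort service: every node forwards port 30080 to port 80 on the Pods.
cat <<EOF > "$nodeport_yaml"
apiVersion: v1
kind: Service
metadata:
  name: web-nodeport
spec:
  type: NodePort
  selector:
    app: web
  ports:
  - port: 80
    targetPort: 80
    nodePort: 30080
EOF

echo "Wrote $nodeport_yaml; create the service with:"
echo "  kubectl create -f $nodeport_yaml"
```

Once created, the application would be reachable at NodeIP:30080 from outside the cluster.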

Run

    kubectl delete -f service3.yaml

List services

    kubectl get service

Access

ingress-nginx

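- ingress is the request entry point of a k8s cluster; think of it as a service for services.

- What people call ingress generally consists of two parts: the ingress resource object and the ingress-controller.

- There are several ingress-controller implementations; ingress-nginx is the community-native one. Choose according to your needs.

- ingress itself can be exposed in several ways; pick one that fits your infrastructure and workload.

Official site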

https://github.com/kubernetes/ingress-nginx

ingress-controller

Download

    wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.1.1/deploy/static/provider/cloud/deploy.yaml

Search the configuration file for kind: Deployment to see which ports are used.

Note

The images referenced in the official configuration file could not be downloaded, so a configuration file with replaced image addresses is provided here.

ingress-controller.yaml

    apiVersion: v1

Run

    kubectl apply -f ingress-controller.yaml

Check the status

    kubectl get pods --namespace=ingress-nginx

ingress configuration

With the ingress-controller deployed, create ingress resources according to what you want to test.

These are the forwarding rules.

ingresstest.yaml

    apiVersion: networking.k8s.io/v1
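Only the first line of ingresstest.yaml is shown above. A minimal sketch of a forwarding rule, assuming it routes the host www.abc.com (the domain mapped in the hosts file later on) to the web service: the resource name, the service port 8888, and the nginx ingress class are assumptions for illustration.

```shell
#!/usr/bin/env bash
work_dir="$(mktemp -d)"
ingress_yaml="$work_dir/ingresstest.yaml"

# Assumed rule: send every path under www.abc.com to service "web" on port 8888.
cat <<EOF > "$ingress_yaml"
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingresstest
spec:
  ingressClassName: nginx
  rules:
  - host: www.abc.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: web
            port:
              number: 8888
EOF

echo "Wrote $ingress_yaml; deploy it with:"
echo "  kubectl apply -f $ingress_yaml"
```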

Deploy the resource

    kubectl apply -f ingresstest.yaml

If you get this error

Internal error occurred: failed calling webhook "validate.nginx.ingress.kubernetes.io": Post https://ingress-nginx-controller-admission.kube-system.svc:443/networking/v1beta1/ingresses?timeout=10s: dial tcp 10.0.0.5:8443: connect: connection refused

Cause

I had initially created nginx-ingress from the yaml file, then deleted the namespace it created along with the clusterrole and clusterrolebinding, but did not delete the ValidatingWebhookConfiguration ingress-nginx-admission, which the yaml file installs. When I later installed nginx-ingress again with helm, creating a custom ingress produced this error.

Solution

List the webhooks with the command below

    kubectl get validatingwebhookconfigurations

Delete ingress-nginx-admission

    kubectl delete -A ValidatingWebhookConfiguration ingress-nginx-admission

Domain mapping

Locate the hosts file

    C:\Windows\System32\drivers\etc

Add the following line and save the file

    192.168.7.11 www.abc.com

Then ping the domain

    ping www.abc.com

The site is now reachable

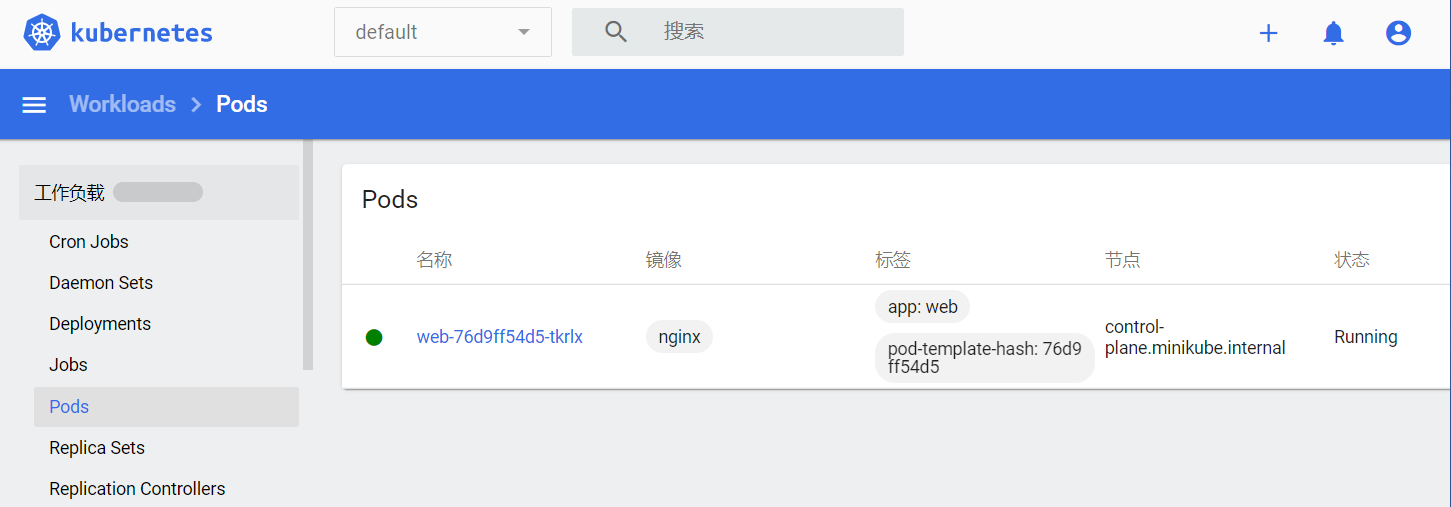

WEB UI

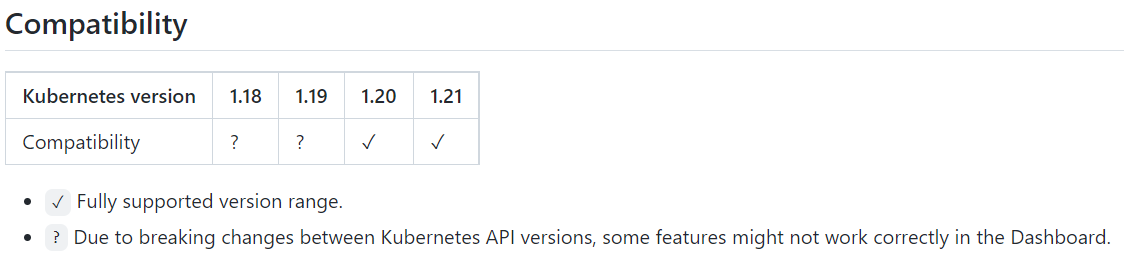

https://github.com/kubernetes/dashboard/releases/tag/v2.4.0

Pay attention to version compatibility. The k8s version used here is 1.20.4, so version 2.4.0 is the one to use.

Download

    wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.4.0/aio/deploy/recommended.yaml

Find

    kind: Service

Change it to

    kind: Service

The change adds nodePort: 30020 and type: NodePort.
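The before and after listings are cut off after their first line. As a sketch of how the edited Service in recommended.yaml might look, assuming the upstream definition (port 443 forwarded to 8443 in the kubernetes-dashboard namespace) with only the two additions described above: the scratch file name is hypothetical and this fragment is for comparison, not for applying on its own.

```shell
#!/usr/bin/env bash
work_dir="$(mktemp -d)"
dashboard_svc="$work_dir/dashboard-service.yaml"

# Assumed result of the edit: the two added lines are "type: NodePort" and "nodePort: 30020".
cat <<EOF > "$dashboard_svc"
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30020
  selector:
    k8s-app: kubernetes-dashboard
EOF

echo "Compare $dashboard_svc with the Service block in recommended.yaml"
```

With this in place the dashboard would be served over HTTPS at NodeIP:30020.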

Run

    kubectl apply -f recommended.yaml

The dashboard is now reachable

Create a user and bind it to the built-in cluster-admin cluster role:

    kubectl create serviceaccount dashboard-admin -n kube-system

显示

serviceaccount/dashboard-admin created

输入

1 | kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin |

显示

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

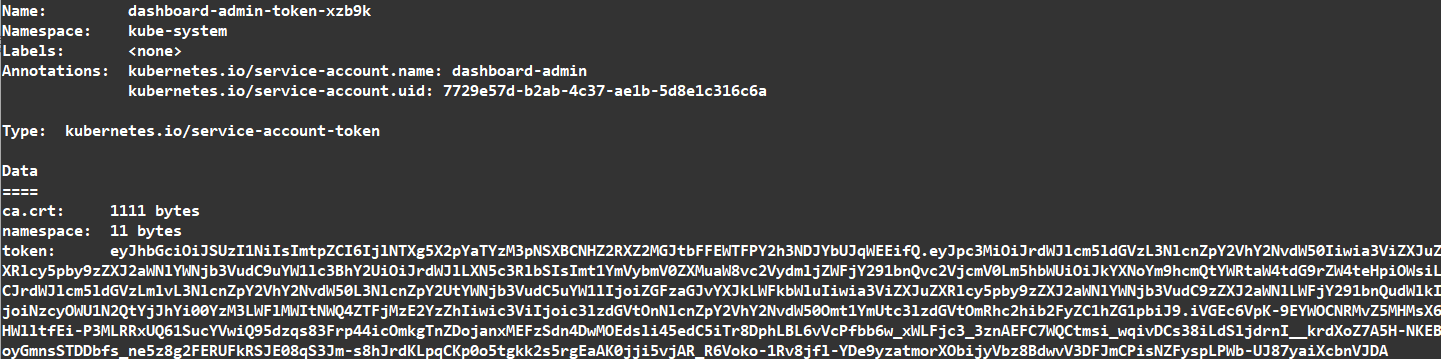

获取token

1 | kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}') |

可以看到